Replicating the facial movements

The robot has multiple motions, each supported by an underlying custom-designed mechanism powered by closed-loop servo motors. The design aims for precise, accurate movement without much backlash; all the joints are supplied with slightly more than the rated power to ensure maximum efficiency of the motors. The modular structure reduces the dependence of any one mechanism on another.

The movable joints of Basanti 1.0 include:

The lips are driven by one servo motor through a two-spur-gear arrangement (1:1 gear ratio). One half of the lip is mounted atop each spur gear, so the two halves converge and diverge simultaneously, just like human lips. The upper-lip arrangement is attached to the immovable face by suspending the motor assembly from the rear, while the lower-lip arrangement is housed inside the jaw, which is itself movable, just like a human face.

The eyes use a 3D-printed rack-and-pinion arrangement driven by one servo motor; extensions attached to the rack move the two eyeballs to and fro.

Another powerful servo motor powers the revolute joint of the jaw, which also carries the lower-lip motion arrangement. It is the most important and intricate part of Basanti 1.0 and gives it a human-like appearance.

Pan motion of the neck is facilitated by a high-torque dual-shaft servo motor that is attached to the complete setup. Tilt motion is harder to achieve and needs extremely high torque. To power this revolute joint, a geared DC motor with a custom-designed feedback setup is used, which reduces the cost and the required input power.
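The feedback setup on the tilt joint is not described in detail. As a minimal sketch, assuming a sensor (for example a potentiometer or an encoder) reports the joint angle in degrees and the motor driver accepts a signed drive value in [-1, 1], a proportional controller with a deadband could look like this. The gain, the deadband, and the toy motor model are illustrative assumptions, not values measured on Basanti 1.0.

```python
def tilt_command(target_deg, measured_deg, kp=0.05, deadband_deg=1.0):
    """Return a signed motor drive in [-1.0, 1.0] from the angle error."""
    error = target_deg - measured_deg
    if abs(error) <= deadband_deg:
        return 0.0  # close enough: stop driving so the joint does not hunt
    drive = kp * error
    return max(-1.0, min(1.0, drive))  # saturate at full drive


def simulate_tilt(target_deg, start_deg=0.0, steps=200, max_speed_deg=2.0):
    """Toy plant (assumed): the joint moves drive * max_speed_deg per step."""
    angle = start_deg
    for _ in range(steps):
        angle += tilt_command(target_deg, angle) * max_speed_deg
    return angle
```

In this sketch the deadband trades a small steady-state error for a joint that stays still once it is near the target, which matters when the gear train has some play.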

Face Tracking

Basanti 1.0 has a webcam mounted on its head that acts as its eyes, giving it a visual feed of its surroundings. However, a single camera does not allow stereo vision, which is the primary requirement for depth perception and focusing, one of the major tasks of the human eye.

The face tracking achieved by Basanti 1.0 currently uses only the pan motion of the neck to track faces in front of it and keep the face aligned with the centre of its canvas. Future versions of Basanti are envisaged to have multi-axis tracking as well as the capability to do the same with the eyes.
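Keeping a face centred with pan alone can be sketched as a small proportional update. The sketch assumes a face detector that returns a bounding box `(x, y, w, h)` in pixels and a pan servo that takes an angle between 0 and 180 degrees; the gain, the deadband, and the sign convention (a larger pan angle turns the head toward the right side of the image) are assumptions that depend on how the servo is mounted.

```python
def pan_step(face_box, frame_width, pan_deg, gain_deg=10.0, deadband=0.05,
             pan_limits=(0.0, 180.0)):
    """Return the next pan angle that moves the face toward the frame centre."""
    x, _, w, _ = face_box
    face_cx = x + w / 2.0
    half_width = frame_width / 2.0
    # Normalised horizontal offset in [-1, 1]; 0 means the face is centred.
    offset = (face_cx - half_width) / half_width
    if abs(offset) < deadband:
        return pan_deg  # already centred: do not move the neck
    new_pan = pan_deg + gain_deg * offset
    low, high = pan_limits
    return max(low, min(high, new_pan))  # respect the servo's travel
```

Calling `pan_step` once per camera frame with the latest detection moves the neck a little each time, so a small gain gives smoother but slower tracking.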

Basic Question-Answering

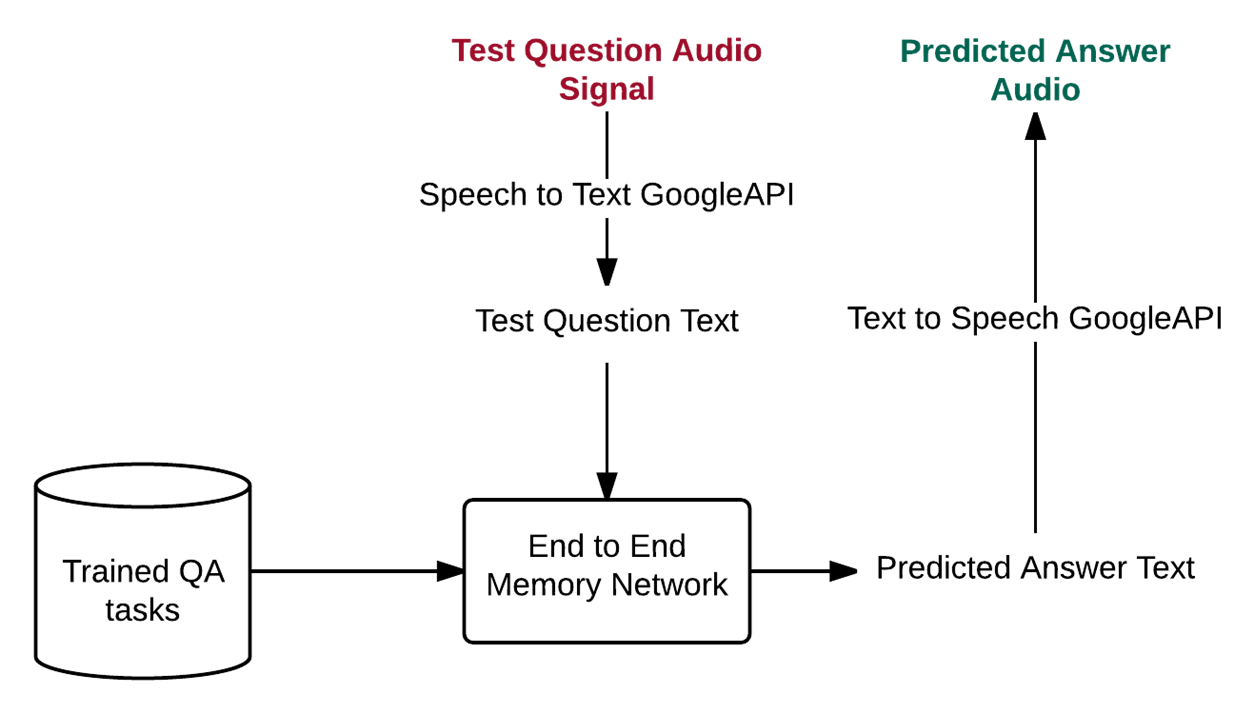

Basanti 1.0 has a mic and a speaker that act as the ears and the voice of the robotic face. Running a CNN on the inside, Basanti 1.0 is capable of listening to the user's questions, processing them via various algorithms, from basic keyword search to advanced end-to-end memory networks, and then replying with an answer derived either from the biography fed to it or from the internet via the operational algorithms.
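The simplest end of that range, keyword search over the biography, can be sketched as follows. This assumes the question has already been transcribed to text and the biography is stored as a list of sentences; the stop-word list and the sample sentences are illustrative placeholders, and the sketch does not cover memory networks or internet lookup.

```python
import re

# Common words that carry little meaning for matching (assumed list).
STOPWORDS = {"a", "an", "the", "is", "are", "was", "what", "who", "where",
             "when", "do", "does", "you", "your", "of", "in", "to", "it"}


def keywords(text):
    """Lower-case the text and keep the words that are not stop words."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower())
            if w not in STOPWORDS}


def answer(question, biography_sentences, fallback="I do not know that yet."):
    """Return the biography sentence sharing the most keywords with the question."""
    q = keywords(question)
    best, best_score = fallback, 0
    for sentence in biography_sentences:
        score = len(q & keywords(sentence))
        if score > best_score:
            best, best_score = sentence, score
    return best


# Placeholder biography for illustration only.
BIOGRAPHY = [
    "My name is Basanti.",
    "I can move my lips, eyes, jaw and neck.",
    "I have a webcam on my head.",
]
```

For example, `answer("What is your name?", BIOGRAPHY)` selects the first sentence, while a question with no overlapping keywords falls back to the default reply.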

Movement based on Voice commands

Basanti 1.0 responds to the commands it is given and performs the corresponding motions (currently only prerecorded ones: neck motions such as turn right and turn left, rolling the eyes, opening and closing the jaw, etc.) based on keyword search or similarity-index search within the input voice command. Owing to computational limitations, the system has considerable lag, which can be avoided in future versions.
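A minimal sketch of that two-stage matching, assuming the voice command has already been transcribed to text: first look for a known phrase inside the transcript, then fall back to a string-similarity score. The motion names, the phrase table, and the 0.6 threshold are hypothetical, and `difflib.SequenceMatcher` from the Python standard library stands in for whatever similarity index the system actually uses.

```python
from difflib import SequenceMatcher

# Hypothetical mapping from spoken phrases to prerecorded motions.
COMMANDS = {
    "turn right": "neck_pan_right",
    "turn left": "neck_pan_left",
    "roll eyes": "eyes_roll",
    "open jaw": "jaw_open",
    "close jaw": "jaw_close",
}


def match_command(transcript, threshold=0.6):
    """Return the motion name for a transcript, or None if nothing matches."""
    text = transcript.lower().strip()
    # Stage 1: keyword search, the phrase appears verbatim in the transcript.
    for phrase, motion in COMMANDS.items():
        if phrase in text:
            return motion
    # Stage 2: similarity search, tolerating small transcription errors.
    best_motion, best_ratio = None, 0.0
    for phrase, motion in COMMANDS.items():
        ratio = SequenceMatcher(None, text, phrase).ratio()
        if ratio > best_ratio:
            best_motion, best_ratio = motion, ratio
    return best_motion if best_ratio >= threshold else None
```

The threshold keeps unrelated speech from triggering a motion; lowering it makes the robot more forgiving of transcription errors at the cost of more false triggers.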

Face Detection and Recognition

Basanti 1.0 does perform object detection and identification, but its primary task is detecting and recognising human faces. Using popular algorithms such as Viola-Jones or HOG-based recognition, trained with deep learning techniques, Basanti 1.0 is capable of facial identification with as few as one training image. Future versions should test techniques developed in-house for better real-time performance, without the limitations of frontal view/side view or picture quality.
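Identification from a single training image is commonly done by storing one feature vector (embedding) per known person and comparing each new face against that gallery. The sketch below illustrates only the comparison step and is not the pipeline used in Basanti 1.0: it assumes the embeddings are computed elsewhere, for example by a deep network, and the tiny vectors, the names, and the 0.8 threshold are placeholders.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def identify(embedding, gallery, threshold=0.8):
    """Return the best-matching gallery name, or "unknown" below the threshold."""
    best_name, best_sim = "unknown", threshold
    for name, reference in gallery.items():
        sim = cosine_similarity(embedding, reference)
        if sim >= best_sim:
            best_name, best_sim = name, sim
    return best_name


# One placeholder embedding per person, as if taken from one training image.
GALLERY = {
    "person_a": [1.0, 0.0, 0.2],
    "person_b": [0.0, 1.0, 0.1],
}
```

Adding a new person to such a gallery needs only one embedding and no retraining, which is what makes single-image enrolment practical.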